How to Integrate OneDrive for Business with Dynamics 365 Customer Engagement

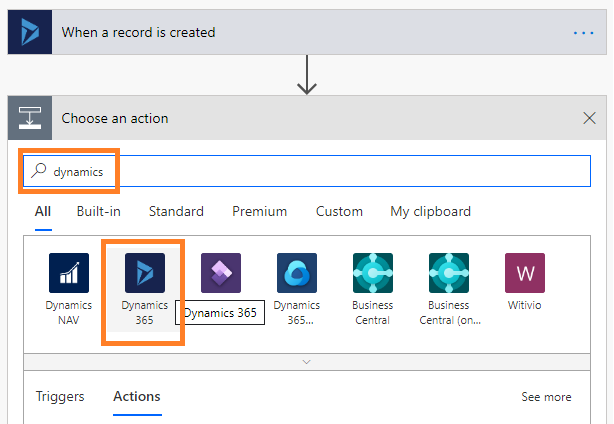

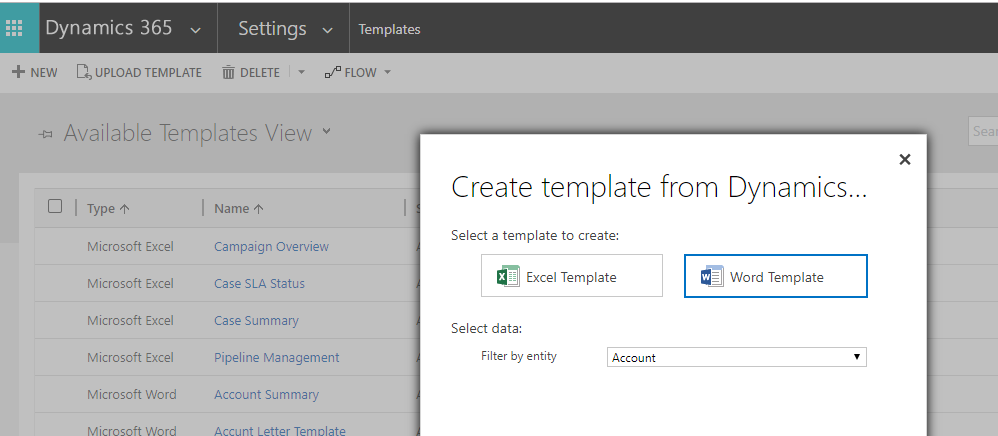

Integrating OneDrive for Business with Dynamics 365 is fairly straightforward. Follow the steps below to walk through the integration process.

Step 1: Enable the Integration Settings in Dynamics 365 CE

Log in to Dynamics 365 CE and navigate to Advanced Settings -> Settings -> Document Management, then click "Enable OneDrive for Business". Select the checkbox and proceed…